A visionary UX designer, Jaime Levy has garnered recognition for her exceptional creativity, innovation, and contributions to the field of user experience design, but most importantly for pioneering online content creation and editing in the early internet era. With an unwavering passion for blending technology, design, and storytelling, Levy has shaped the digital landscape and redefined the way users engage with products and services.

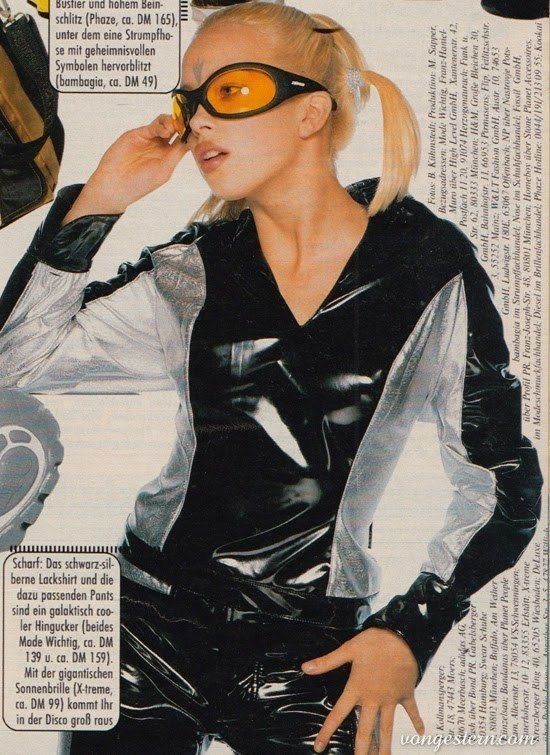

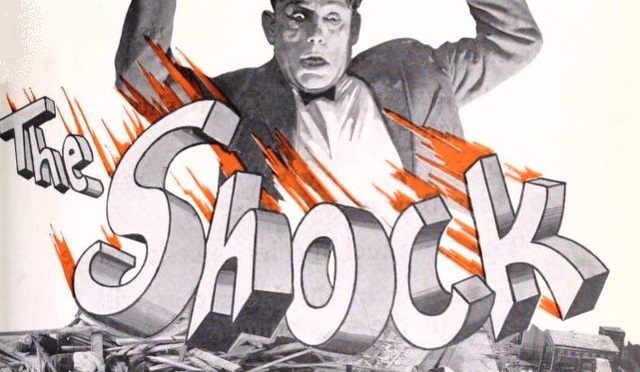

It was a period of change and of cyberpunk: the Berlin Wall had fallen, the Soviet Union dissolved shortly afterwards, and the web reached individuals, creating new ways of connecting and producing content through a multitude of services. The internet revolution was a high wave that only a few daring individuals were able to surf.

Jaime Levy was born in Hollywood, California. She attended San Francisco State University, graduating with a B.A., and then earned a master’s degree from the Interactive Telecommunications Program at New York University’s Tisch School of the Arts.

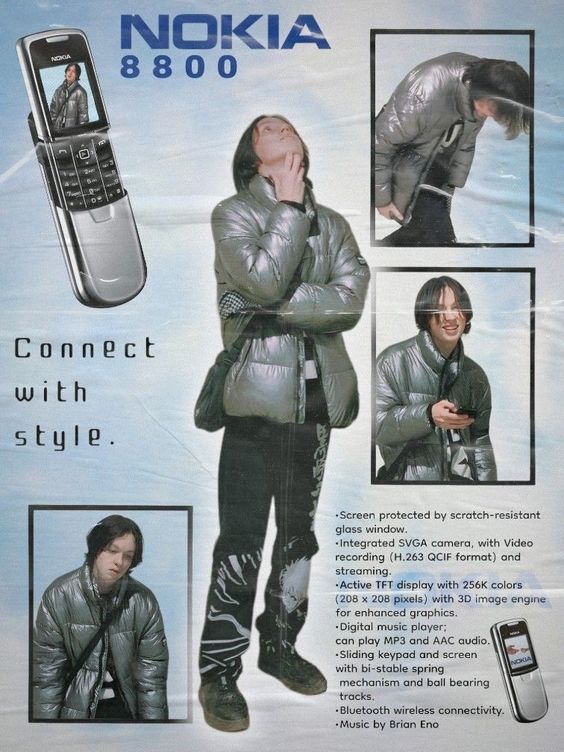

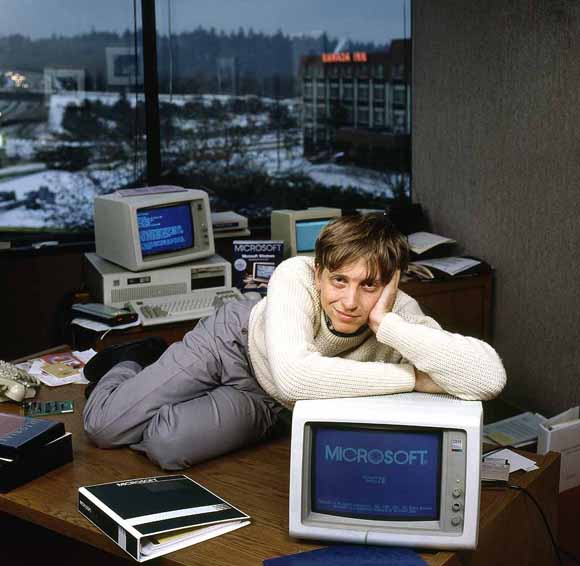

The 90s were the years of Silicon Valley’s commercial boom, with a crescendo of computer companies investing in California. The new medium was the computer, which had become a staple appliance in many households across the United States.

Jaime Levy understood the potential of the web not just as a communication tool, but as a lever for a whole new range of products and services, from advertising to web development, including the distribution of digital goods that bypassed traditional channels.

The 90s fostered the idea that the web would spawn entirely new forms of digital interaction and content consumption. The internet seemed poised to replace old media like the press and TV, and slowly everyone turned their heads to the marvel of creating virtual products without any industrial manufacturing.

This was the era of Web 1.0, when users mainly acquired information through HTML documents, an extension of the press, libraries, and bookshops. However, Jaime didn’t like playing by the rules and pushed ahead to distribute her work to any computer owner.

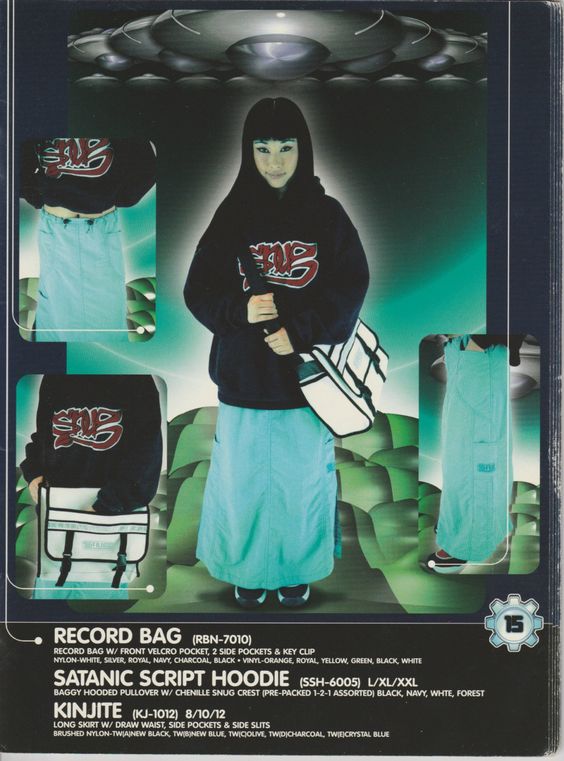

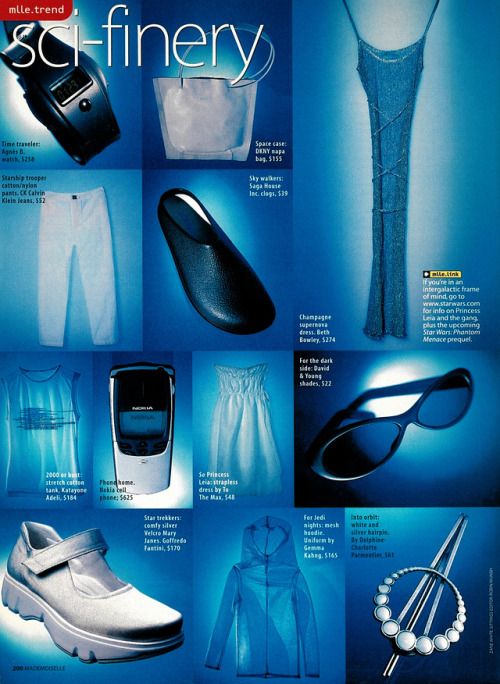

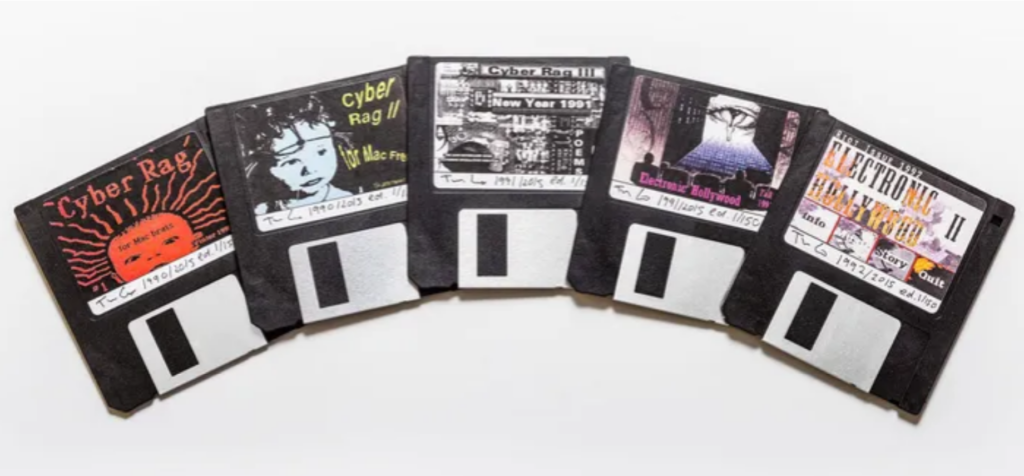

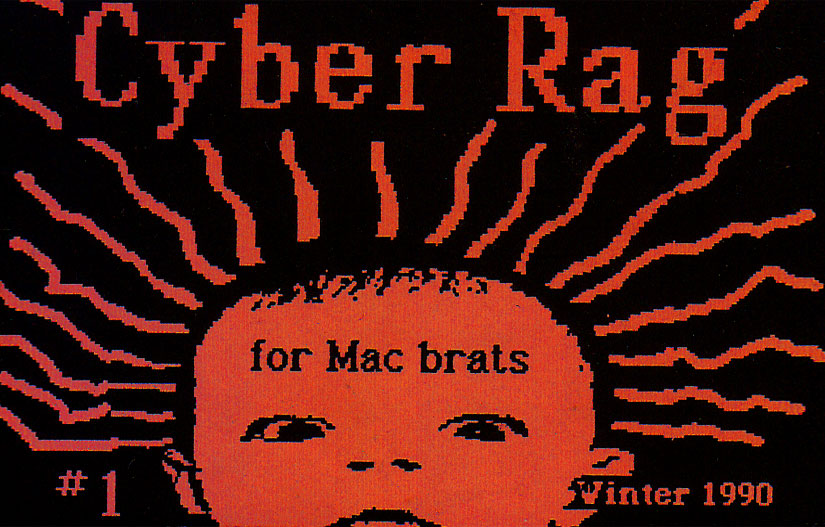

In the early 90s Jaime created and published Cyber Rag, an alternative cyberpunk e-zine distributed on floppy disk. The magazine is part of her counter-culture artistic production and a major statement of early digital art shown in public galleries. In 1993 her work as a digital artist led her to produce an interactive press kit for Billy Idol’s album Cyberpunk.

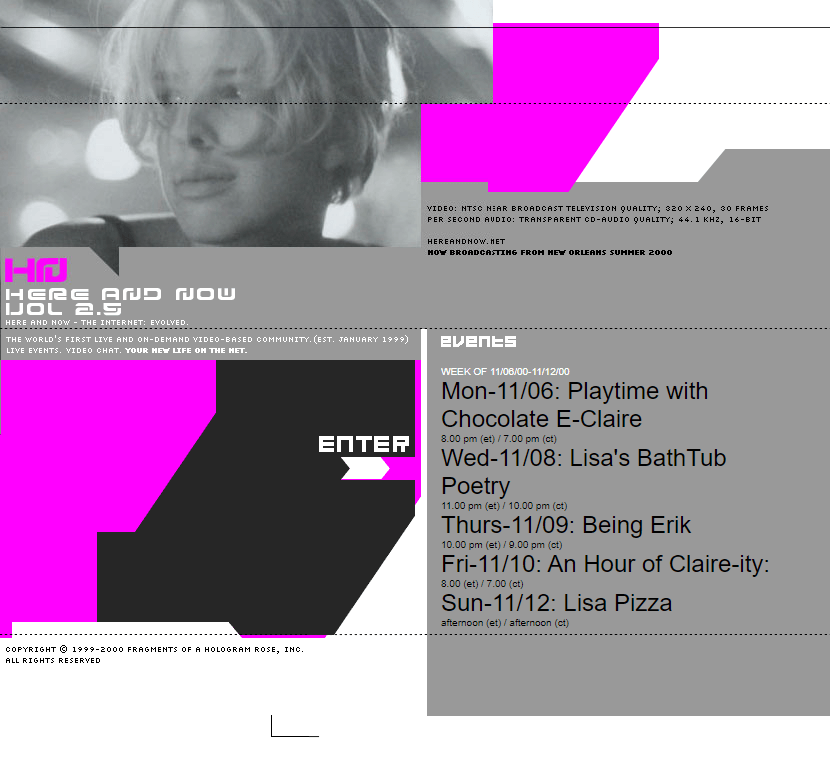

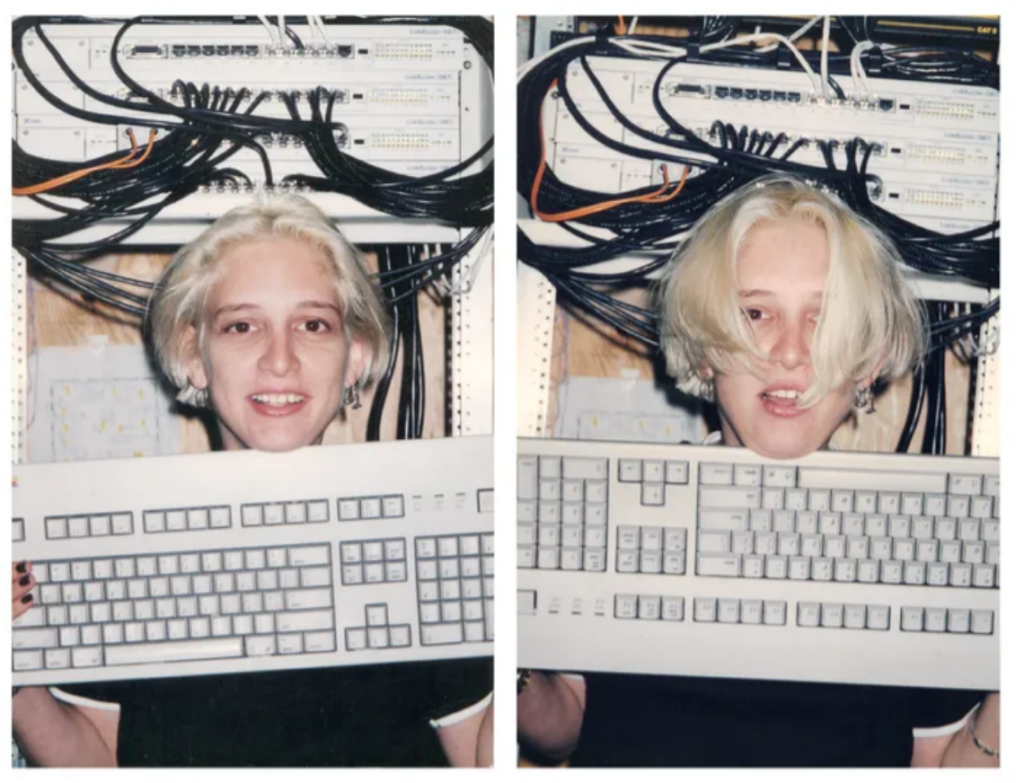

In 1994 Jaime was working at IBM as a UI designer while also producing an animated series called Cyber Slackers, a major contribution to online content and one of the earliest productions seen on the web. The ideas came from brainstorming sessions Jaime held with her friends in New York, creating things far ahead of their time and drawing inspiration from real life.

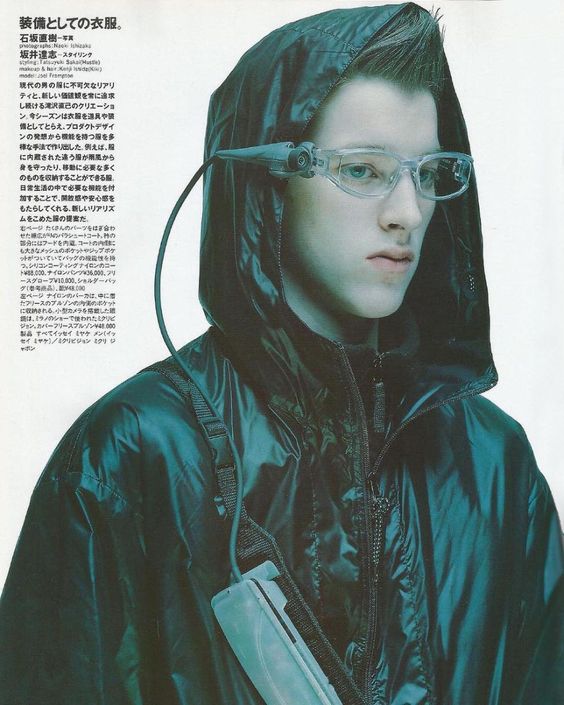

It was at IBM that Jaime was first introduced to the Mosaic web browser. Understanding how important dynamic content would be for her creativity, she began exploring HTML and followed that path, boosting her career and her work output.

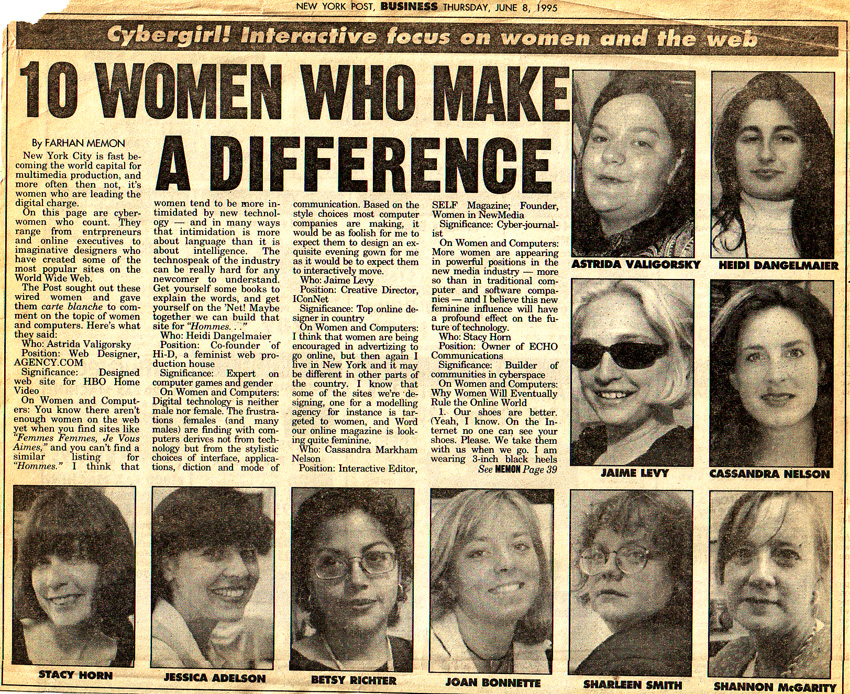

A year later she landed a creative director position at Icon CMT, where she started creating the online magazine Word.Com, which received praise and recognition for its structured content. Her constant effort and creativity eventually earned her a place among Newsweek’s top 50 people in cyberspace, followed by a TV feature on Good Morning America as one of the most powerful twenty-somethings in the US.

Being able to read a magazine online was unique at the time. Web pages, usually static and written in plain HTML (CSS came later), were suddenly enhanced with animation and sound, making interactivity the main feature of this content. Web users were exposed to a new type of media that put them in control of what they were reading.

Looking back at Jaime’s accomplishments, it’s possible to see how far she pushed the envelope of digital creativity, and how successful she was in influencing Silicon Valley with her ability to develop content and leverage the web as a major distribution platform.

Thirty years ago the general view of the internet was dismissive: it was a thing for nerds, a niche for specific groups of people devoted to tech, nowhere near radio, TV, or the press in coverage and influence. Along with other women in tech, she was featured multiple times in newspapers and magazines for her ability to change the status quo of cyberspace.

As a pioneer of interface design and early online digital content, Jaime continuously experimented with her work. A roller coaster of opportunities and failures chiseled her career, shaping her into an influential creative before anything else. At heart she is an artist experimenting with technology, pouring out the postmodern narrative of a newly globalized society that used computers to change culture.

As the 90s moved into their second half, things started to change for Jaime. She left Word.Com confident that she would find a new role at another company and continue innovating the web, but it didn’t go as planned: the drumbeat of the commercial internet began to drown all her work and creativity in noise. Creating relevant content became a struggle as the web grew exponentially, quarter after quarter.

After looking around, she moved back to Los Angeles with a deep sense of uncertainty, leaving behind New York, her major efforts on Cyber Slackers, and her published content and artworks. She went from a nice Manhattan loft to freelance gigs somewhere in the City of Angels.

Perhaps returning home was a rushed decision. Some of her friends and former colleagues stayed in New York as the East Coast became the second hub of tech innovation, and design companies such as Razorfish would become important players in the industry. Jaime reflected on this, asking herself whether she would have fit in, or whether these companies were ready to handle her.

So why haven’t we seen Jaime’s work or heard of her in recent years? Most of her creations were stored on media we no longer use, and they were never distributed beyond their original format; the last time I used a floppy disk was 2003. Jaime’s work isn’t for the masses: it is carefully crafted art that found its purpose through the digital medium. Cyberpunk, too, wasn’t for everyone, and as a movement it was dwindling, yielding to other socio-cultural trends. On top of that, the dot-com bubble had just burst, crashing the markets and creating a black hole into which company investments vanished.

In 2016 I was searching for reading material on UX design, and many online users recommended UX Strategy by Jaime Levy, published by O’Reilly Media (ISBN 1449373003). The book is a solid collection of well-explained, well-laid-out information that uses real cases to illustrate how to create experiences for brands.

After finishing the book, taking many notes, and sticking plenty of bookmarks between its pages, I went on with my life and left the book on my shelf. I never bothered to look up the author, as I usually do, and then forgot about it.

It’s 2023, and out of curiosity I decided to read Jaime’s book again, skimming the highlighted sections to test my knowledge. The book still holds up despite the passage of time. An updated second edition was published two years ago by the same publisher (ISBN 9781492052432); it’s next on my purchase list, and I’m eager to see what new strategies she covers. This is how I discovered Jaime Levy and her work, and why I decided to write about her on this blog.

Jaime’s career spans more than thirty years of digital creative publishing and innovation that influenced the early days of the web. Her work was perhaps ahead of its time, like that of the avant-garde artists who often see the future before anyone else. Getting things right and wrong is part of these artists’ duty, with no need to be apologetic.

Jaime has continued her career in UX design, providing innovation and strategy to companies like IBM, Huge, Cisco, and many more. She has taught at various universities in California and New York, contributing her knowledge to shape experience-making in product design.